With or without AI we are facing a pandemic of slop

In his new book A Trick of the Mind, experimental psychologist and cognitive neuroscientist Daniel Yon offers a thought-provoking take on AI. He proposes that the border between human creativity and AI is more porous than the dominant narrative suggests, explaining that there is a close symmetry between what happens in AI's artificial neural networks and what happens in the networks of our own minds.

Human evolution depends on random variability. Without genetic mutation there would be no variability and humans would never change, meaning every child would be an exact copy of their parents. Daniel Yon builds on the work of the social psychologist and scholar Donald T. Campbell to propose that this dependence on random mutation applies not just to human evolution but also to creative evolution: without random mutation in the creative process, there is no development. All creativity depends on taking old ideas and combining them in new, unusual, and sometimes wrong ways. Extreme but identifiable examples of this creative process are the use of the I Ching as a tool of chance divination by Philip K. Dick, Allen Ginsberg, Jack Kerouac, William Burroughs, John Cage, and others.

Creativity occurs when the neural networks in our brain take old ideas and combine them in new, unusual, and sometimes wrong ways. This is exactly what AI does: it operates in the same way as the human brain, but across a vastly larger dataset. These training datasets are growing rapidly as computing power increases. Daniel Yon explains that, at the present stage of development, a large language model is trained by parsing the equivalent of approximately one billion books. A human reading ten books a week, every week of the year without a break, would get through 520 books a year, so it would take about two million years to read the dataset a single AI model parses during training.

At this point I move beyond Daniel Yon's objective and scholarly research into more subjective territory. First, the old chestnut of the Infinite Monkey Theorem demands attention. In theory, monkeys typing at random for an infinite time would eventually produce the complete works of Shakespeare, but in practice the odds are so remote that it will never happen. There is, however, a case for arguing that AI could eventually produce literary masterworks, because a typing monkey is a purely random process, whereas AI learns through an integral feedback loop that progressively replaces randomness with intentionality. AI is at an early stage of development. Henry VI Part II, Shakespeare's first play based on history, was probably written in 1591, when he was 27. By then the playwright was well past his early stage of development: he had done a great deal of parsing of texts and had benefitted from years of feedback loops.

Then there is the other old chestnut: the laughable and sometimes dangerous random errors in AI-generated content. But if you asked a five-year-old to write a historical drama, the result would resemble the much-derided AI slop. Similarly, if you asked AI to compose a short concert piece for piano and it delivered four minutes and thirty-three seconds of silence, the result would be dismissed as AI slop.

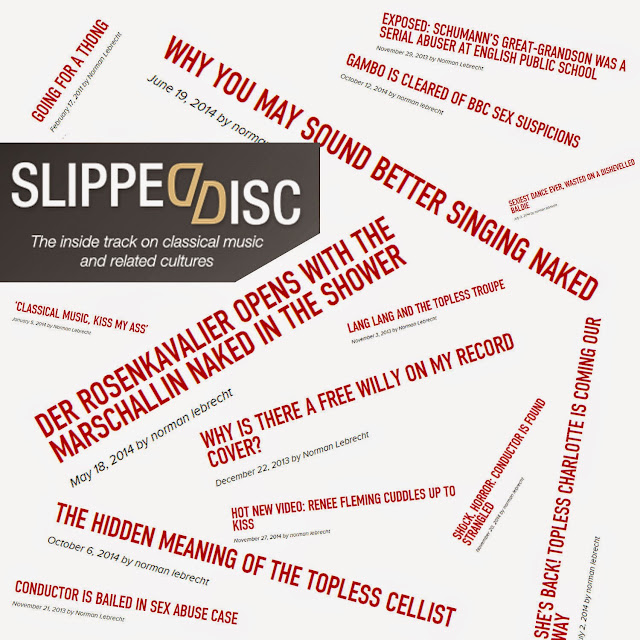

And let's not forget that AI does not have a monopoly on slop. AI training datasets are being inflated by both human and AI slop. Isn't MSN clickbait slop? Isn't the Netflix algorithm slop? Isn't social media humanoid slop? Isn't BBC Radio 3 Classic FM slop? Isn't Melania political slop? Isn't An Overgrown Path self-serving slop?

Generation Slop is replacing Generation Z, and there will be an awful lot more slop before AI writes the next literary masterwork. But we need to see beyond this. AI is a classic technology-driven disrupter that will not go away. Just like the internet, music streaming, and social media, it will cause major and painful disruption to the creative industries, hitting the current fiscal model particularly hard. It can be argued that central government could have mitigated this impact by, for instance, levying a surcharge on AI companies and diverting the revenue to human creativity. But this was never likely to happen, as central government is always on the side of big tech. One example of this laissez-faire approach is how social media has been allowed to grow unchecked despite its major impact on established media and, more importantly, on mental health. Similarly, AI will be allowed to grow with minor controls and major impacts.

With or without AI, we are facing a pandemic of slop. That is an ambiguous and not very optimistic conclusion, I am afraid. But we need a better understanding of AI, because it is not going away. If it is any consolation, as Daniel Yon explains, the human brain created AI by modelling its own neural system. So the many strengths and weaknesses of AI are not dissimilar to our own human strengths and many weaknesses. And just as we must learn to live with humans we don't like, we will have to learn to live with AI.
